Tuesday, June 22, 2010

This site has moved to http://victorfang.wordpress.com/

The reason is that some countries, such as China, block Blogger sites.

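Ancient Chinese Music

Why did the ancient Chinese design it this way? The root lies in national character. Traditional Chinese culture values steadiness, grandeur, and moderation, and the five notes of the pentatonic scale, however they are arranged, always sound harmonious to some degree. By contrast, the twelve-tone equal temperament for which Bach laid the groundwork contains many semitones, which increases the potential for conflict.

Ironically, though, it was precisely this harmony that became the shackle on the development of ancient Chinese music. Like a lumbering elephant, each step heavy yet full of laughable pride, it went on until it was beyond correction. The framework Bach laid down, by contrast, offered a scientific and inclusive design; although it is still not precise enough for certain instruments such as the violin, it has been sufficient to regulate the development of modern music.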

CS Highschool Summer Camp 2010

Fun to watch them code!

Young men on the road!

Monday, June 14, 2010

Future of PC

Amazing, right?

Lots of modern information technologies rely on this mechanism and architecture, and people are increasingly willing, even happy, to generate their words and bytes in the cloud.

In the future, maybe in less than 10 years, the PC will fade out like any other old-fashioned technology. ISPs such as Time Warner and AT&T would simply give you a FREE simple "device" like a lightweight netbook (you might need to buy your own screen for under $200), plus a cable, on a $50/month contract. High-performance CPUs, like the one you are using right now just to surf the web, will remain only in labs and mainframes. All computation-heavy applications will move into the cloud, and you won't know where your blog post physically sits; the one thing you can be sure of is that whenever you request it, it will come to you.

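A Short Glossary of Music Terms

Adagio: slow. Common as the second movement of a symphony or concerto; also used as the title of a slow piece [...]

Aria: a lyrical solo song with accompaniment, found in opera or cantata [...]

Cadenza: a passage near the end of a concerto movement or operatic aria in which the soloist shows off technique.

Cantata: a work that sets a religious text to music with instrumental accompaniment [...]

Chamber Music: instrumental music for an ensemble of three to fifteen instruments, such as the trio (Trio) and the quartet [...]

Chamber Orchestra: a small orchestra of about fifteen to twenty-five players.

Chanson: French for "song".

Chorale: a hymn of thanksgiving and praise of the German Protestant or Lutheran church.

Coda: from the Latin for "tail"; the closing section of a movement or piece.

Concerto: a genre developed in the Baroque period [...]

Concerto Grosso: the forerunner of the solo concerto; smaller in structure and scale than the concertos of the Classical or Romantic periods [...]

Opera: drama set to music, in which music, libretto, and plot are equally important. Types include opera seria (Opera Seria), comic opera (Opera Buffa), bel canto opera (Bel Canto Opera), operetta (Operetta), German light opera [...] (Opéra Comique), and others.

Opus (Op.): work number; Latin for "work". Composers or music publishers use it to catalogue works [...]

Oratorio: originally meaning a prayer room; it developed into a musical genre in the sixteenth century. Similar to opera [...]

Orchestra: an ensemble of more than fifteen players.

Overture: from the French for "opening"; the instrumental prelude that begins an opera, oratorio, or similar work. [...] Overture) is an independent orchestral work.

Partita: originally a theme-and-variations form (Theme and Variations); in the Baroque period it came to mean a suite.

Plainsong: (see Gregorian Chant).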

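Polyphony: music that combines two or more independent voices.

Prelude: in the Romantic period, the introduction to each act of an opera or ballet, sometimes even replacing the overture.

Programme Music: instrumental music that depicts a title or even a story by purely musical means.

Recitative: speech delivered in song in an opera or oratorio. Compared with the aria, recitative emphasizes narration [...]

Requiem: the Mass for the dead in the Catholic liturgy.

Rhapsody: a free-form genre of the Romantic period [...]

Rondo: a lively, quick form of the Classical period.

Scherzo: Italian for "joke". Developed by Beethoven in the nineteenth century to replace the minuet [...]

Serenade: music for the evening. In the eighteenth century, a multi-movement instrumental work [...]

Sonata: from the Italian for "sounded", as distinct from sung. The trio sonata (Trio Sonata) is written for three instruments (though it is actually played by four performers [...])

Sonata Form: a form developed in the Classical period, often used for the first movement of a symphony or concerto [...] and recapitulation (Recapitulation).

Suite: in the Baroque period, mostly a series of dances. In the Romantic period [...]

Symphonic Poem: a single-movement orchestral work of the Romantic period.

Symphony: an important genre developed in the Classical period; in effect a sonata for orchestra [...]

Tempo: Italian for "time"; refers to the speed at which a piece is played. Common ones include:

Theme and Variation: (see Variation).

Toccata: from the Italian for "touch"; an instrumental solo piece that shows off the performer's technique [...]

Tone Poem: same as symphonic poem (Symphonic Poem).

Variation: a piece built as a series of variations on a single theme (Theme). In each variation [...]

Voices: parts; the human voice. Voices are classified by range: soprano (Soprano), mezzo-soprano [...] baritone (Baritone), and bass (Bass). [...] Soprano) and lyric tenor (Lyric Tenor).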

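Tempo, Dynamics, and Expression Marks

---Tempo marks---

Largo: very slow, broad

Lento: slow

Adagio: slow

Larghetto: rather slow

Andante: walking pace

Andantino: a little Andante, usually slightly faster

Moderato: moderate

Allegretto: moderately fast

Allegro (All°) Moderato: moderately fast Allegro

Allegro: fast

Allegro assai: very fast

Allegro vivace: fast and lively

Vivace: very fast (lively)

Presto: very quick

Prestissimo: as quick as possible

Più allegro: faster

Meno allegro: slower

Più mosso: more motion, faster

Poco a poco: little by little

Accelerando = accel.: gradually faster

Ritardando = rit.: gradually slower

Rallentando = rall.: gradually slower

A tempo: return to the original tempo

Tempo primo = Tempo I: return to the original tempo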

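---Dynamics marks---

pp pianissimo: very soft

p piano: soft

mp mezzo piano: moderately soft

mf mezzo forte: moderately loud

f forte: loud

ff fortissimo: very loud

cresc.: gradually louder

Crescendo: gradually louder

<: gradually louder

decresc.: gradually softer

Decrescendo: gradually softer

>: gradually softer

dim. Diminuendo: gradually softer

poco: a little, slightly

più: more

> ^ Accento: accented, sudden strong emphasis

sf Sforzando: accented, sudden strong emphasis

fz Forzato: accented, sudden strong emphasis

rf. rfz. rinf. Rinforzando: accented, sudden strong emphasis

fp Forte piano: loud, then immediately soft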

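---Expression marks---

Agitato: agitated, excited

Animato: animated, spirited

Appassionato: passionate, ardent

Brillante: brilliant

Cantabile: in a singing style

Con brio: with brilliance and vigor

Con moto: with motion, somewhat faster

Con spirito: with spirit

Dolce: sweet, gentle

Doloroso: sorrowful, grieving

Energico: energetic, forceful

Espressivo: expressive

Grave: very slow

Grazioso: graceful

Legato: smooth, connected

Leggiero: light

Maestoso: majestic

Marcato: marked, emphasized

Molto: very

Morendo: dying away

Scherzando: playful

Sostenuto: sustained

Subito: suddenly, immediately

ten. = tenuto: held for as long as possible

Tranquillo: calm, peaceful

Vivo: lively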

Sunday, June 13, 2010

Saturday, June 12, 2010

The Adventure of Lawrence Jiaqi Jin

[...] took 8 months to finish a master's degree, then went to work on Wall Street. At his busiest, he could work 20 hours a day for long stretches.

From the California Institute of Technology to Citigroup Corporate and Investment Banking

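Skipping a Grade at Tsinghua

1. Preface

Everyone has a history of their own, and anyone's past could fill a book. What I record here is only my own simple, ordinary story, and I have always believed that those who come after me, with ideals and a firm grip on themselves, will do even better. I do not know where fate will take me, but I will always keep kindness by my side. I dedicate this essay to my bygone student days.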

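2. Hazy years sealed in memory

As a primary-school pupil I was simply naughty and playful. Although I tried to do everything properly, I was not the competitive type. Only after I was, quite by chance, admitted without examination to Beijing No. 5 High School, a key school in Beijing, on the strength of the Yingchun Cup (迎春杯) mathematics competition did I gradually learn what it means to strive and surpass. From the third year of junior high onward my grades stayed in the top three of my year, and I began to stand out in mathematics, physics, and chemistry. Besides winning a good number of national, municipal, and district prizes, I was admitted without examination in 2002 to the Basic Science Class (基础科学班) of Tsinghua University thanks to first prizes in the senior-high mathematics and physics leagues. There, in what is arguably the hardest and most exhausting place inside Tsinghua, an unforgettable experience began.

The Basic Science Class was set up at the suggestion of Professor Chen-Ning Yang when he returned to China in 1997. Outsiders call it the "Nobel class", and Science magazine has reported on it. Its goal is to train new-century talent able to compete internationally. Each year the class selects 30 students from those admitted via the national leagues and another 30 from Tsinghua's 3,500 freshmen, so many members are Olympiad gold medalists or provincial top scorers in the college entrance examination. The 60 students of the class of 2000 alone included 5 top scorers. One can imagine how fierce the competition is and how strong the students are. Students in the Basic Science Class enjoy privileges unavailable in other departments: fewer restrictions on course selection, teachers who are mostly authorities in their fields, and, in the third year, the freedom to choose an advisor from anywhere in the university or even the country and to start challenging research training.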

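3. Attempting to skip a grade

Once I entered the Basic Science Class, unprecedented pressure arrived. My class had 62 students: 4 International Olympiad gold medalists, 2 top scorers in the college entrance examination, and the rest mostly first- or second-prize winners from the national league finals. I may have been a leading figure in high school, but among Tsinghua's elite of the elite I was quite outshone. Still, I held to one belief: however hard the task, I would do my best through my own effort; however strong the competition, I would climb to the top and stand out. With that resolve I quickly began to move up. I carefully observed my classmates' strengths, from ways of thinking down to lecture notes, studied each of them, and tried to absorb the best for my own use. I am not especially clever, but relying on intuition and ideals I earned near-perfect marks in first-year courses such as general physics and advanced calculus, and in the winter of 2003 I began working on skipping a grade, aiming to graduate from Tsinghua a year early and continue my studies at a top-tier university abroad.

Most undergraduates never even think of skipping a grade, let alone in the Basic Science Class, the hardest place at Tsinghua in which to get ahead. I studied the whole four-year curriculum carefully and then proposed to the university a modified plan tailored to my skipping a grade. But conservative forces exist everywhere, and as someone seeking his own new path I met considerable resistance. Only after I took the upper-level courses in quantum mechanics and numerical analysis in the second semester of my first year and earned near-perfect marks, and scored 100 on an electromagnetism exam that half the year failed, did the voices holding me back gradually quiet down.

In the summer of 2003, with the support of the Academic Affairs Office and the university leadership, I transferred into the Basic Science Class one year above mine (基科1字班) and kept moving toward a higher dream.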

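4. The battle for GPA

GPA, the grade point average, is one of the main measures foreign universities use to judge an undergraduate's performance. Not wanting to jeopardize my chances at a top-tier university, I naturally took my GPA very seriously. But because of skipping a grade I had to take third- and fourth-year courses in my first year, two physical-education courses each semester, and a doubled load in the short summer term. The difficulties came one after another, and I dealt with each of them carefully. Working 20 hours a day, I finished the skip and still ranked first in the higher year in both the final overall ranking and the ranking in my major, which laid a good foundation for applying abroad.

While taking courses across years I gradually discovered the benefits of flexibility. Many upper-level courses shed light on lower-level ones, while simple examples from lower-level courses help a great deal in understanding advanced concepts. So with the right combination and a clever approach, a jump across years that looks very hard actually has a path to follow.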

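5. Trying undergraduate research

One important reason I originally chose the Basic Science Class was that its students can join a research group in their third year and train under leading academicians and experts in China, which is undoubtedly a help in applying to graduate school abroad. Since skipping a grade cost me a year, I had to join a group in my second year. I spent a lot of effort getting to know the laboratories in Tsinghua's departments, and in the end did research simultaneously at the Quantum Information and Measurement Laboratory, one of the Ministry of Education's key laboratories, and at Tsinghua's Institute of Microelectronics. I also used my spare time to attend talks at the Center for Advanced Study and discuss problems with professors. Besides coping with the impact of skipping a grade, I read papers late every night and tried giving presentations at seminars, and before the end of my second year I finally completed two papers on quantum information and quantum computation and submitted them to Physical Review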

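A Random Walk on Wall Street

6. Applying abroad

After two years of coursework and research training, I began my applications. Besides the GRE and TOEFL, papers, the personal statement, and recommendation letters all matter. My thinking was that if I could finish in two years what takes others three and still rank first in my year, the other items could not be allowed to fall short. Three recommendation letters are usually enough, but since I kept in touch with several institutes I could obtain more and target them precisely. In the end Academician Gu Binglin, president of Tsinghua University; Academician Zhu Bangfen, chair of the Physics Department; Academician Li Zhijian, director of the Institute of Microelectronics; and four other professors from the Physics Department and the Center for Advanced Study all generously recommended me, which helped my applications considerably.

Even so, applying to graduate school after only two years as an undergraduate somewhat weakened outsiders' confidence in my ability. I applied only to the 15 best schools in the United States, received 8 offers and 3 rejections, and withdrew from all the rest, including those at the interview stage.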

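7. From physics to electrical engineering

Early on I had wavered between electronic engineering and the Basic Science Class. Electronic engineering is hugely popular across China; it is close to practice, yet demanding in mathematics and physics and full of challenge. Taking the opportunity of applying abroad, I tried applying directly to EE at Caltech and received a fellowship. Caltech ranked first in a US university ranking in 1999, and its physics, applied physics, and astronomy are among the very best in the world; EE is also one of its engineering strengths. After much comparison I decided to turn down Berkeley, withdraw from MIT physics, and go to Caltech. In August 2005 I posted the essay "Skipping a Grade at Tsinghua: My Leap" (《跳级在清华---我的飞跃之旅》) on the SMTH (水木) forum, describing my nearly three years at Tsinghua, and in early September I flew to Los Angeles.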

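8. At Caltech: a master's degree in 8 months

At Caltech I quickly sensed the intelligence of the professors and students around me. The campus was even smaller than I had imagined; a short walk takes you off it. But it is truly full of hidden talent. Caltech is widely regarded in American education as one of the most exhausting places, with the highest density of Nobel laureates, and both students and professors have to shed a layer of skin to survive. Still, after 2 years and 10 months of skipping grades at Tsinghua, I was confident I could handle whatever came. Many American universities run three quarters a year, each about ten weeks long. Caltech's undergraduate program is famously hard precisely because taking 5 to 6 courses per quarter is a heavy load. When I arrived and looked at the calendar, I realized that by taking 8 to 9 courses each quarter I could earn two master's degrees in 8 months, which seemed a fine idea. So in my first quarter I signed up for 9 courses worth 73 units and earned a 4.2 GPA, nearly straight A+. Later, because of university rules, the plan for a double master's in one year had to be called off.

During my 8 months at Caltech I worked toward the master's degree while doing research, and published papers in network coding and wireless communication at the international venues ISIT and ITA. In early June 2006 I graduated as planned.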

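9. Joining Yahoo! Inc. and starting down the business road

I had always had doubts about academia and whether it suited me. After experiencing Tsinghua and Caltech and being steeped in academic life, I became more certain that this was not the path I wanted. Finance and engineering therefore became my focus. I happened to have taken Business, Economics and Management courses at Caltech with very good grades and had joined the related club, so I often heard news about industry. At the end of April 2006 Yahoo! Inc., one of the three search-engine giants, happened to be looking for interns. Seeing a rare opportunity, I sent in my résumé, and after several rounds of phone and on-site interviews I beat out competitors from MIT on the East Coast and got into Yahoo! Inc.

At Yahoo! Search Marketing my work touched on modeling, finance, statistics, and strategy, and it was a very challenging job. Since my long-term ambitions lay in business and industry, I eventually decided to turn the summer internship into a full-time position and begin a new chapter. After a good deal of effort I finally joined Yahoo! Inc., which also meant that my student days had come to a close.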

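10. Joining Citigroup's investment bank: a random walk on Wall Street

My summer internship ended in mid-September 2006, and before my H-1B took effect on October 1 I chose to travel in the East. I arrived in the long-awaited New York City and plunged into Wall Street. Lying between the World Trade Center and the harbor, Wall Street is small but packed with elites. The DB tower stands proud, the big sign of 60 Wall Street comes into view, and the NYSE draped in a giant American flag is yet another sight. Beside Times Square the Morgan Stanley building stands out, and on Park Ave. JPMorgan Chase & Co. and UBS set each other off. Full of ambition, I naturally loved New York, this world financial center. Yet walking slowly out of the subway and seeing people down and out on the street, I could not help being moved. Only when we know what poverty and loss are do we realize how much suffering there is in the world, and that there are people who bear it. I want to soar and strive, if only for one brilliant moment.

On the flight from Boston back to Los Angeles I resolved that I must enter the real financial industry, the Bulge Bracket of Wall Street, and write a new chapter with my own intelligence and courage. A dream is fine, but making it come true is far from easy. For someone who had been in the United States only a year, with no finance background or banking experience, getting into such a selective and competitive field was anything but trivial. But my earlier trials had settled it: once I set my sights on something I go all out until I can face it with a smile. Even if I fail once or twice, I get back up.

With confidence and conviction I began a new job hunt. For investment banks and hedge funds, each firm's interviews ran to more than 3 major rounds and 10 smaller ones. Interviewers tested quantitative skills, behavioral skills and teamwork spirit, understanding of the industry, brainteasers, and much more. Every firm's culture is different, so thorough research beforehand is essential. Returning to NYC for interviews, I looked out over the Manhattan night from the Grand Hyatt Hotel and was soon captivated by the city's beauty. Call it metamorphosis or sublimation, it is never easy. After tasting every kind of hardship, I finally made it into the New York headquarters of Citigroup Corporate and Investment Banking / Salomon Smith Barney and opened a new chapter on Wall Street. Salomon Brothers was once a mainstay of Wall Street and was later acquired by Travelers Group. Citigroup was formed by the merger of Citicorp and Travelers; it currently ranks 8th among the world's top 500 companies and 1st in the Forbes Global 2000, and is the most profitable financial services institution in the world. Citigroup stands out in debt, quantitative trading, wealth management, M&A, and many other areas, and, with commercial banking as its base, has moved into investment banking, competing on Wall Street both with universal banks such as BOA and JPMorgan Chase and with traditional investment banks such as Goldman Sachs, Morgan Stanley, and Merrill Lynch. In 2005 Citi took first place in 14 of the 25 categories of the Thomson Financial league tables. Being able to learn and grow among such an outstanding group is truly a good thing for me.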

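11. Farewell, Los Angeles

Looking back on my year and more in Los Angeles, I went through life's ups and downs. Whenever my career hit bottom I was in tears every day, helpless and resigned, struggling in pain. But I never wavered in believing that my original dream would come true. I understood that life had opened up and that I had to decide what kind of brilliance I wanted; I also knew that if pride is never slapped down by the cold sea of reality, one cannot learn how hard one must work to reach faraway places. About to fly to the East Coast, I felt some reluctance to leave. The beauty of LA, my affection for Santa Monica, Diamond Bar, Pasadena, and Arcadia, and the hardships mixed in with them all came to mind. As I travel farther and farther away, the same melody stays in my ears: forget some sorrow, forget some confusion, carry all the madness, carry all the courage... In this brilliant instant my heart quietly blooms; in this moment of blooming it burns like wild grass... In this burning instant my heart quietly blooms; in this moment of blooming, you and I are so glorious...

The future is always full of uncertainty, and no one can say what awaits us. But none of that can be an excuse for laziness, because a brilliant life is paved bit by bit by people with dedication and courage. This world has never been fair, but everyone has the right to choose excellence. I have always felt that cleverness is not the deciding factor; at Tsinghua and Caltech, where top talent gathers, nobody's IQ is low. What matters most is to keep hold of oneself, not to give up lightly when difficulties come, and to keep walking forward. As for gossip and the suspicion and rivalry between people, one must slowly learn understanding and tolerance.

Know what you want and know what cannot be reversed; know how to realize your dream and know in what spirit to face hardship. Then, in an instant of insight, questions of advance and retreat, gain and loss, and never letting go all find their answers.

I do not know where fate will take me, but I will always keep kindness by my side.

Jiaqi Jin

November 30, 2006, Pasadena, CA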

Wednesday, June 9, 2010

Tuesday, June 8, 2010

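Basic Types of US Companies

Conditions and procedure for registering a US company

1. Company name

A company name may use letters or numerals. It must not be identical to or confusable with another company's name and must not use prohibited words. The name must end with a word such as "Limited" or "Corporation", or its abbreviation. The US branch of a foreign company may prefix its name with "US" or "America" to distinguish it from its parent.

2. Registered capital

US states generally do not require a minimum registered capital. Except for financial companies, there is no statutory reserve requirement. Shareholders may contribute cash, property, labor, or technology, with the subscription value determined by the board of directors. Shares may be divided into classes; for example, some shares may carry preferred dividends but no voting rights, to suit people who simply want to invest in the company without taking part in shareholder decisions. The classification and settlement of shares is closely tied to tax liability, so it is worth consulting a professional familiar with tax law in advance.

3. Scope and mode of business

Apart from drugs, firearms, television, broadcasting, finance, news publishing, aviation, and the like, the scope of business is generally unrestricted. The mode of operation is generally unrestricted as well.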

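Registration procedure

1. Choose a company name

To prevent reuse of an already registered name and misleading the public, the state registration office searches your proposed name before processing your filing. A new company is approved only once it is confirmed that the name has not been registered before. To avoid delays you can submit several candidate names at once.

2. The incorporators sign the Articles of Incorporation

The articles cover key points such as the company name, share structure, directors, and scope of business. The articles and the by-laws are binding on the company and its members, and are generally regarded as a contract between the company and its members and among the members themselves. Every set of articles must include: the company name; the authorized share capital, i.e. the nominal maximum capital the company may raise, which can be increased or reduced as circumstances require; and other provisions, including the classes of shares, the maximum number of each class that may be issued, and the restrictions and privileges attached to the shares. The articles do not serve to interpret the by-laws. The by-laws, within the scope set out by the statute, define the rights of members, how the company operates, and the powers and duties of the directors. The by-laws generally must not conflict with the articles.

3. Fill in the application form

State the company's registered address and the names and addresses of the directors and the company secretary. The company secretary is indispensable; the role mainly receives government documents, business correspondence, tax notices, and court summonses, and can be filled by your lawyer. The advantage of using a lawyer as secretary is that as soon as any legal document is served, the lawyer can contact you immediately or take appropriate action on your behalf to protect the company's interests. Most states allow as few as one shareholder or director, who may be a foreigner; US citizenship is not required. If the company has only one shareholder, that person can even serve as both chairman and secretary.

4. Pay the registration fee

Registration fees vary by state, from tens to hundreds of dollars. Taking a limited liability company (LLC) registered in Utah as an example: the initial registration fee is $52, annual renewal $12, name check $22, and trademark registration $22. With other miscellaneous expenses, the total comes to about $150.

The company is established once the registration authority issues the business license. From application to completed registration generally takes two to three weeks. In Utah, if you are willing to pay a $75 expedite fee, all formalities can be completed within a week. If you are in a hurry, you can buy a pre-registered shell company from a lawyer; it costs more but is ready for immediate use.

A company must obtain a commercial sign permit from the city's building and housing department before it can publicly hang or display its sign. Neon lights or light boxes used as signs generally require a permit first.

By law, after registration a company must file an annual report with the state corporate registry every year, reporting its list of directors and its address and paying the renewal fee. If annual reports are missed for two years, the registration is cancelled.

All business entities other than sole proprietorships must obtain an Employer Identification Number from the Internal Revenue Service (IRS) by filing Form SS-4. The Employer Identification Number, also called the company tax ID, is one of the items required to open a business bank account and to file taxes once the company is established. A sole proprietor who needs to file taxes for employees or set up a retirement plan must also apply for an Employer Identification Number first. Retailers, wholesalers, and companies providing business services generally must also apply to the state tax department for a sales tax number (SALES TAX) and fill in Form DTF-17.

When hiring staff in the United States, the employer must withhold employees' income tax. The items involved include Social Security tax, Medicare tax, federal unemployment tax, state unemployment tax, disability insurance, workers' compensation insurance, and so on. US law also requires the employer to submit W-2 wage statements and tax filing documents for all employees to the Social Security Administration every February.

After registration you can operate essentially any lawful business. But you must report and pay profits tax to the tax authority every quarter. Late payment is penalized severely. If the company has not yet started operating, you should file a form to notify the tax authority.

Company registration, annual reports, and tax filings can generally all be handled by a lawyer, though of course you can do them yourself. Different company forms and different ownership structures produce different legal rights and tax liabilities, so when setting up a company you must study carefully and seek advice.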

Sunday, June 6, 2010

Friday, June 4, 2010

Wednesday, June 2, 2010

Monday, May 31, 2010

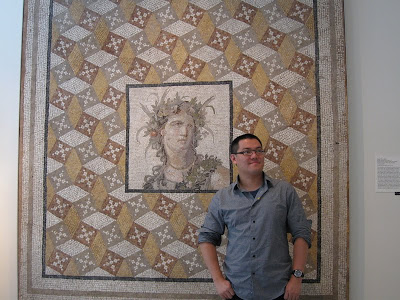

Roman Mosaic Art, 2nd century AD, NYC Metropolitan Museum

For me, the most impressive piece of art in the NYC Metropolitan Museum is this one:

Mosaic floor panel

Roman, Imperial, 2nd century AD,

Excavated from a villa at Daphne near Antioch, the metropolis of Roman Syria

The root of "science", which dominates worldwide today, lies in ancient Greece, that is, in Western culture. As a Chinese, I'd like to think about why ancient Chinese culture, which had dominated the world for centuries, faded out in the 19th century.

This Roman mosaic panel, from the 2nd century AD, in some sense reflects the different ways of thinking of Eastern and Western cultures.

Analysis is the major philosophy of science, and calculus is a good example of it. Analytic power provides a "scalable" way of doing research that can be inherited by the next generation, which is lacking in Eastern culture.

In this panel, thousands of tiny tiles together form the holistic view of an "imaginary & continuous" subject, believed to be a celebrity of that era. This shows a way of breaking an impossibly infinite problem down into scalable, discrete steps. If the tiles are small enough, we can approximate that "imaginary & continuous" object or concept to an acceptable extent.

On the other hand, Chinese culture and art prefer to capture a holistic view of an object in an abstract and implicit way (which is believed to be the ultimately proper way of solving problems), as in ink wash painting and Chinese medicine. At small scale this approach is good enough to meet the engineering goal, but as the problem grows to a larger scale it is no longer the preferable way.

Nowadays, science with its analytic power seems to be the best way, and it dominates. The nice thing I found at the NYC Met is that this piece of art from the 2nd century AD gives a quick and direct answer.

Friday, May 21, 2010

Wednesday, May 19, 2010

Oxford Buildings Dataset


http://www.robots.ox.ac.uk/~vgg/data/oxbuildings/index.html

Friday, May 14, 2010

Scaling data attributes before using SVM!

Scaling before applying SVM is very important. Part 2 of Sarle's Neural Networks FAQ (Sarle, 1997) explains the importance of this, and most of the considerations also apply to SVM. The main advantage of scaling is to avoid attributes in greater numeric ranges dominating those in smaller numeric ranges. Another advantage is to avoid numerical difficulties during the calculation. Because kernel values usually depend on the inner products of feature vectors, e.g. the linear kernel and the polynomial kernel, large attribute values might cause numerical problems. We recommend linearly scaling each attribute to the range [-1, +1] or [0, 1].

Of course we have to use the same method to scale both training and testing data. For example, suppose that we scaled the first attribute of training data from [-10, +10] to [-1, +1]. If the first attribute of testing data lies in the range [-11, +8], we must scale the testing data to [-1.1, +0.8]. See Appendix B for some real examples.
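A minimal Python sketch of that rule, under the assumption of a single numeric attribute: the minimum and maximum are learned from the training data only and then reused, unchanged, for the testing data. The helper name `fit_scaler` and the sample values are made up for illustration.

```python
def fit_scaler(train_column, lo=-1.0, hi=1.0):
    """Learn min/max from the TRAINING column and return a scaling function."""
    cmin, cmax = min(train_column), max(train_column)
    span = cmax - cmin

    def scale(x):
        if span == 0:
            # A constant attribute carries no information; map it to the midpoint.
            return (lo + hi) / 2.0
        return lo + (hi - lo) * (x - cmin) / span

    return scale


# Hypothetical first attribute: training range [-10, +10], testing range [-11, +8].
train = [-10.0, -4.0, 0.0, 10.0]
test = [-11.0, 8.0]

scale = fit_scaler(train)                  # parameters come from training data only
train_scaled = [scale(x) for x in train]   # spans [-1, +1]
test_scaled = [scale(x) for x in test]     # about [-1.1, +0.8], outside [-1, +1] on purpose
```

Testing values may land slightly outside [-1, +1]; that is expected, because refitting the scaler on the testing data would silently change the meaning of the attribute.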

Tuesday, May 11, 2010

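Graphs, Spectra, Markov Processes, and Cluster Structure (zz)

http://dahua.spaces.live.com/

Graphs, Spectra, Markov Processes, and Cluster Structure

The four terms in the title are all hot research topics in machine learning and related fields. On the surface they belong to different topics, but in fact they are different angles on the same problem. Quite a few papers have discussed the connections among them and shown us how wonderful this world is.

Starting from graphs

The simplest concept here is the graph, which represents relationships between things. A graph has a set of nodes, each representing an object, joined by edges that represent the relationships among them. Simple as it is, this concept's significance for scholarship is hard to overstate. Almost everything studied in any field involves interrelations, and graphs give those relations a unified, flexible, and powerful mathematical abstraction. Scholars in many fields have therefore studied graphs in depth, and results about graphs from one field can be borrowed by others.

Matrix representation: bringing algebra into the world of graphs

Mathematically, a widely used representation is the adjacency matrix. A graph with N nodes can be represented by an N x N matrix G, where G(i, j) holds a value expressing the connection between node i and node j; usually the larger the value the closer the relationship, and 0 means there is no direct connection. This representation is very direct but very important, because it links two fundamental mathematical concepts: graph and matrix. The matrix is the most important concept in algebra, and giving a graph a matrix representation opens the way to studying graphs by algebraic methods. Mathematicians saw this decades ago and founded an important branch of mathematics: algebraic graph theory.

Algebraic graph theory studies graphs through their matrix representations. Readers familiar with linear algebra know that an important concept there is the spectrum. Many properties of a matrix are closely related to its spectral structure, that is, its eigenvalues and eigenvectors. So once we have a matrix representation of a graph, we can study the graph's properties by studying the matrix's spectral structure. Usually we analyze the spectrum of the graph's adjacency matrix or its Laplacian matrix. As an aside, the spectral structures of these two matrices happen to be symmetric to each other.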

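Spectrum: the algebra of "divide and conquer"

The word spectrum appears in many places; we have probably heard more often of the frequency spectrum, the optical spectrum, and so on. What exactly is a spectrum? The concept is not mysterious. Simply put, it comes from the strategy of divide and conquer: a complicated thing that is hard to study directly is decomposed into simple components. If we regard something as a superposition of components, then those components, together with their respective proportions, are called its spectrum. A frequency spectrum, for example, decomposes a signal into components each of a single frequency.

The spectrum of a matrix is its eigenvalues and eigenvectors. An ordinary linear algebra textbook gives the definition: if A v = c v, then c is an eigenvalue of A and v is an eigenvector. Is this just a mathematical game invented by mathematicians? People who have just learned it may not grasp deeply what the formula stands for. In fact the spectrum here again represents a component structure, and it opens an important route to studying the action of a matrix by divide and conquer. We can think of a matrix as an operator whose action is to turn one vector into another: y = A x. For certain vectors the action is very simple: A v = c v, which just stretches v by a factor of c. We call such vectors v1, v2, ..., which cooperate so closely with A, eigenvectors, and the factors c1, c2, ... eigenvalues. When a new vector x comes along, we can decompose it as a combination of these vectors, x = a1 v1 + a2 v2 + ..., and the action of A on x decomposes accordingly: A x = A (a1 v1 + a2 v2 + ...) = a1 c1 v1 + a2 c2 v2 + ... So the spectrum of a matrix is what we use to decompose the matrix's action.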
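A small worked instance of the identity A x = a1 c1 v1 + a2 c2 v2 may help. The 2 x 2 matrix, its eigenpairs, and the vector x below are chosen by hand for illustration and are not from the original post; the sketch uses plain Python lists rather than a linear algebra library.

```python
def matvec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


# Hand-picked symmetric matrix with known eigenpairs:
#   A v1 = 3 v1 for v1 = (1, 1)    and    A v2 = 1 v2 for v2 = (1, -1)
A = [[2.0, 1.0],
     [1.0, 2.0]]
v1, c1 = [1.0, 1.0], 3.0
v2, c2 = [1.0, -1.0], 1.0

# Decompose x = a1 v1 + a2 v2 (easy here because v1 and v2 are orthogonal).
x = [3.0, 1.0]
a1 = (x[0] + x[1]) / 2.0   # 2.0
a2 = (x[0] - x[1]) / 2.0   # 1.0

direct = matvec(A, x)                                               # A applied to x
via_spectrum = [a1 * c1 * v1[i] + a2 * c2 * v2[i] for i in range(2)]
```

Both `direct` and `via_spectrum` come out as (7, 5): applying A is the same as scaling each eigen-component of x by its own eigenvalue.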

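To extend this a little: a vector can be seen as a function on the integers: given i, it returns v(i). This extends to a continuous function (an uncountably infinite-length vector, so to speak), and the matrix A correspondingly becomes a continuous function of two variables (a matrix of infinite area). The sum in matrix multiplication then becomes an integral. Likewise, the action of A can be understood as mapping one continuous function to another; A is then no longer called a matrix but, usually, an operator. The spectral analysis above applies to operators too (going from finite to infinite requires some extra mathematical care, which I will skip). This is an important part of functional analysis: spectral theory.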

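Markov processes: understanding graphs from the viewpoint of time

Back to graphs: what is the spectrum of a graph for? By the reasoning above, it would seem to be for decomposing a graph. The role of the spectrum here is still divide and conquer, but it should not be understood literally as chopping the graph into pieces; rather, it decomposes a process running on the graph into a superposition of simple processes. If every node of a graph carries a value, then a process running on the graph is a process of updating those values. One simple and commonly used process is the Markov process.

Anyone who has studied stochastic processes knows Markov processes. The idea is simple: "the future is determined only by the present and is independent of the past." Consider a graph in which every node carries a value that is updated repeatedly. Each node is connected by edges to some other nodes; for each node the weights on these edges are positive and sum to 1. Updating a node's value means taking the weighted average of the values of the nodes connected to it. If the graph is connected and aperiodic (a property called ergodicity in mathematics), the process eventually converges to a unique stable state (the equilibrium state).

The Markov update process on a graph matters greatly in many disciplines. Where can this mathematical abstraction be used? (1) Google's ranking of search results (PageRank) rests in principle on this core process. (2) MCMC, a sampling procedure widely used in statistics, has the above transition process at its core. (3) Diffusion processes that are ubiquitous in physics (such as heat diffusion and fluid diffusion) are closely analogous to it. (4) Certain aggregation and exchange processes for information in networks (such as random gossiping) are the same as this process. There are many more. A great many real processes reduce to the above process after some simplification and approximation, so studying this core process is important for understanding many phenomena. Scientists in various fields have studied it from their own angles and reached many essentially identical conclusions, many of which hinge on the spectral structure of the graph.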

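Where graph and spectrum meet

By the definition above, the adjacency matrix A is in fact the transition probability matrix of this Markov process. Stacking the node values into a vector v gives an algebraic description of the process: v(t+1) = A v(t). At equilibrium, v = A v, so the stable state is an eigenvector of A with eigenvalue 1. This is where the spectrum enters. List the eigenvectors of A as v1, v2, ..., with A vi = ci vi. Each vi is a very special but very simple state: every round of updating multiplies all node values by ci. If 0 < ci < 1, all node values decay exponentially until they approach 0.

In general we start from an arbitrary state u, and its update process is not so simple. We analyze it spectrally by decomposing u = a1 v1 + a2 v2 + a3 v3 + ... (It can be proved rigorously that for such a transition probability matrix the largest eigenvalue is 1, corresponding here to the equilibrium state v1, while the other eigenstates v2, v3, ... correspond to eigenvalues 1 > c2 > c3 > ... > -1.) After t update steps the state becomes u(t) = a1 v1 + a2 c2^t v2 + a3 c3^t v3 + ... Apart from the component representing equilibrium, which stays unchanged, the other components decay exponentially as t grows, and in the end the whole state approaches equilibrium.

From this analysis, the convergence speed of the process is governed by the slowest-decaying non-equilibrium component, whose decay rate depends on the second largest eigenvalue c2: the closer c2 is to 1 the slower the convergence, and the closer to 0 the faster. Here we see the meaning of the spectrum. First, it decomposes a Markov process running on a graph into a superposition of simple sub-processes: one equilibrium process and several exponentially decaying non-equilibrium processes. Second, it shows that the equilibrium state is the component for the largest eigenvalue, 1, while the convergence speed depends mainly on the second largest eigenvalue.

So the second largest eigenvalue c2 is a crucial quantity for describing the process. Is bigger better, or smaller? That depends on the problem. If you are designing a sampling or update process, you want a small c2: it makes the process more efficient and, when the graph structure changes, lets it reconverge promptly, which keeps the process stable. For a network, a small c2 favors the rapid diffusion and spread of information.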

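Cluster structure: understanding graphs from the viewpoint of space

The size of c2 often depends on the cluster structure of the graph. If the nodes fall into several groups, each clumped together with few links between groups, the structure is very unfavorable to diffusion. In some cases convergence can even take O(exp(N)) time. This matches intuition: picture two large water tanks connected only by a very thin pipe; reaching equilibrium takes a long time. Interested readers can work out the two-tank problem: the second largest eigenvalue of this system is directly related to the ratio of the pipe's flow to the tanks' volume, and decreases as the ratio grows.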
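As a rough sketch of the two-tank picture (two water tanks joined by a thin pipe), assume two equal tanks and a pipe that, at every step, moves a fraction r of the level difference from the fuller tank to the emptier one; r stands in for the flow-to-volume ratio and is assumed to lie in (0, 0.5]. The update matrix is then [[1-r, r], [r, 1-r]], with eigenvalues 1 and c2 = 1 - 2r. The model and all names below are illustrative assumptions, not taken from the original post.

```python
def step(levels, r):
    """One update: a fraction r of the level difference flows through the pipe."""
    h1, h2 = levels
    flow = r * (h1 - h2)
    return (h1 - flow, h2 + flow)


def second_eigenvalue(r):
    """The update matrix [[1-r, r], [r, 1-r]] has eigenvalues 1 and 1 - 2r."""
    return 1.0 - 2.0 * r


def steps_to_balance(levels, r, tol=1e-3):
    """Count updates until the two levels differ by less than tol."""
    steps = 0
    while abs(levels[0] - levels[1]) >= tol:
        levels = step(levels, r)
        steps += 1
    return steps


start = (10.0, 0.0)
thin_pipe = steps_to_balance(start, r=0.01)   # c2 = 0.98: slow to balance
wide_pipe = steps_to_balance(start, r=0.25)   # c2 = 0.50: fast to balance
```

Each step multiplies the gap between the two levels by c2 while the total amount of water stays fixed, so a thinner pipe (smaller r, c2 closer to 1) needs many more steps than a wide one.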

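Generalizing this phenomenon, mathematics has an important model called conductance. Skipping the formulas, the gist is that the average ratio of the flow between node sets to the size of the node sets is tied to the second largest eigenvalue by monotone upper and lower bounds. Conductance describes how nodes are connected spatially on the graph, so this model links the second eigenvalue c2 to the graph's spatial clustering structure.

The more pronounced the cluster structure, the larger c2; conversely, the larger c2, the more pronounced the clustering, and when c2 = 1 the graph breaks into two or more disconnected pieces. In this sense, the larger c2 is, the easier it is to cluster the nodes of the graph. Clustering is an important topic in machine learning, and over the past decade a new clustering method grown out of algebraic graph theory uses precisely the spectral structure associated with the second largest eigenvalue: spectral clustering. In computer vision it corresponds to a famous image segmentation method called Normalized Cut, and a lot of work uses it. The success of this method really depends on the size of c2, that is, on how we construct a graph that favors clustering; the value of c2 itself can also serve as an indicator of clustering quality, or of clusterability. Regrettably, in papers many use the method but few explore its inner character in depth.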

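To sum up

Graphs are an important mathematical abstraction for expressing relationships between things and for propagation and diffusion processes.

The matrix representation of a graph opens the way to studying graphs by algebraic methods.

The spectrum, as an important algebraic tool, is valuable because it decomposes complex objects and processes.

The Markov update process on a graph is an important abstraction of many real processes.

The spectral structure of a graph matters because it lets us decompose and analyze the Markov update process.

The first eigenvalue of a graph corresponds to the equilibrium state of the Markov process; the second eigenvalue characterizes the convergence speed of the process (sampling efficiency, speed of diffusion and propagation, stability of the network).

The second eigen-component of a graph is closely related to the cluster structure of its nodes, so the cluster structure of a graph can be analyzed through its spectral structure.

The Markov process represents a temporal structure and the cluster structure represents a spatial structure; the spectrum ties them together and mathematically captures this deep relationship between time and space.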

Naive Bayes classifier notes

Therefore, before we construct the NB classifier, we need to run a correlation analysis over the whole feature set; if we find a subset of highly correlated features, we should keep only one feature from that subset.

Otherwise, it'll be a lousy NB classifier.
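One way to sketch that pre-filtering step, assuming numeric features and using the absolute Pearson correlation with a hand-picked threshold of 0.9 (both are illustrative choices, not a recommendation from the original note). The feature names and values are made up.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a constant column is treated as uncorrelated
    return cov / math.sqrt(vx * vy)


def drop_correlated(features, threshold=0.9):
    """Greedily keep a feature only if it is not highly correlated with one already kept."""
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical data: height in cm and in inches are nearly the same feature.
features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "shoe_size": [40, 37, 43, 39, 41],
}
kept = drop_correlated(features)
```

Here `height_in` is dropped because it duplicates `height_cm`, which would otherwise be counted twice under the NB independence assumption. Pearson correlation only catches linear dependence, so this is a first-pass filter.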

Tuesday, May 4, 2010

some interesting UCR research

http://www.cs.ucr.edu/~bcampana/

Saturday, May 1, 2010

SDM 2010 summary

Here are some good presentations:

1, L1 sparse learning tutorial by Jieping Ye: provides lots of insightful and up-to-date resources. The amazing part is about separating the non-smooth penalty from the loss function.

2, Best Paper Award

Fast Single-Pair SimRank Computation, Pei Li, Renmin University of China.

This paper improves the SimRank algorithm, but it was badly presented.

3, Best Student Paper Award

A Compression Based Distance Measure for Texture

Authors: Bilson J. Campana and Eamonn Keogh, University of California, Riverside.

The impressive part is the constant-time figure. The authors filled their slides with cartoons, which also made the talk a bit childish.

4, Social networks and graph mining.

This is the era of social networks and graph mining: there were lots of papers on this topic, especially on label propagation in heterogeneous biological networks.

Monday, April 26, 2010

Locality Sensitive Hashing collection

http://people.csail.mit.edu/gregory/download.html

Paper

http://www.mit.edu/~andoni/LSH/

Weakly Supervised Learning

Optimal Integration of Labels from Labelers of

Unknown Expertise

http://mplab.ucsd.edu/~jake/OptimalLabeling.pdf

Monday, April 12, 2010

recursively print out a clockwise matrix element

1 2 3 4 5 6 7 8 9 10

36 37 38 39 40 41 42 43 44 11

35 64 65 66 67 68 69 70 45 12

34 63 84 85 86 87 88 71 46 13

33 62 83 96 97 98 89 72 47 14

32 61 82 95 100 99 90 73 48 15

31 60 81 94 93 92 91 74 49 16

30 59 80 79 78 77 76 75 50 17

29 58 57 56 55 54 53 52 51 18

28 27 26 25 24 23 22 21 20 19

#include <stdio.h>
#include <stdlib.h>

// Fill an n x n matrix with 1..n*n in a clockwise spiral, in C
// www.VictorFang.com
// 20100412
// m     : matrix being filled
// x, y  : top-left corner of the current ring (x == y in every call)
// start : first value to write in this ring
// n     : side length of the current ring
void setmatrix(int** m, int x, int y, int start, int n){
    int i, j;
    // even sizes end with an empty ring
    if(n <= 0)
        return;
    // odd sizes end with a single center cell
    if(n == 1){
        m[y][x] = start;
        return;
    }
    for(i = x; i < x+n-1; i++)
        m[y][i] = start++;       // upper edge, to the right
    for(j = y; j < y+n-1; j++)
        m[j][x+n-1] = start++;   // right edge, downward
    for(i = x+n-1; i > x; i--)
        m[y+n-1][i] = start++;   // lower edge, to the left
    for(j = y+n-1; j > y; j--)
        m[j][x] = start++;       // left edge, upward
    // recurse into the next inner ring
    setmatrix(m, x+1, y+1, start, n-2);
}

int main(void) {
    FILE *fp;
    int n = 10; // size of matrix, 10x10
    int i, j;
    char fstr[32];
    int** matrix = (int **) malloc( n*sizeof(int *) );
    for(i = 0; i < n; i++)
        matrix[i] = (int *) malloc(n*sizeof(int));
    for(i = 0; i < n; i++)
        for(j = 0; j < n; j++)
            matrix[i][j] = 0;
    // do the job!
    setmatrix(matrix, 0, 0, 1, n);
    snprintf(fstr, sizeof fstr, "%d.txt", n);
    fp = fopen(fstr, "w");
    if(fp == NULL)
        return 1;
    // print each row to the screen and to the file
    for(i = 0; i < n; i++){
        for(j = 0; j < n; j++){
            printf("%4d ", matrix[i][j]);
            fprintf(fp, "%4d ", matrix[i][j]);
        }
        printf("\n");
        fprintf(fp, "\n");
    }
    fclose(fp);
    printf("Result is written into file: %s\n", fstr);
    for(i = 0; i < n; i++)
        free(matrix[i]);
    free(matrix);
    getchar();
    return 0;
}

Saturday, March 27, 2010

php server (on Win7 ) working finally

Today I wasted some time on this.

I had installed PHP5 and MySQL separately before, whereas WAMP installs everything from its own package into its own folder.

Beware! You should uninstall any previous PHP, completely and cleanly, to avoid trouble.

In my case, I uninstalled the old PHP5 from the Control Panel and then went ahead and installed WAMP. Everything worked just fine, except for one weird bug!

No matter how I configured the files in WAMP, PHP NEVER printed out any error messages!

I searched around and still could not find a solution until, 10 minutes ago, I wondered whether the uninstallation had really finished. Then I took a look at the old PHP folder. It was still there! And the php.ini was in that old folder!

Oh Jesus Christ!!!

I deleted the whole old folder and restarted WAMP, and bingo! It works!

Sunday, March 21, 2010

some engineering ways to hack MD5 hash

5MB words:

http://www.md5decrypter.com/

7GB words:

http://www.md5decrypter.co.uk/

MD5 takes a string of any length and hashes it into a 128-bit value that serves as a "signature" for that string.

In principle, if we stored every possible 128-bit value as an index into a database, with the short password as the item value, the space needed would be on the order of

2^128 ≈ 3.4 × 10^38

entries, which is far too large in practice. But if we store only the strings we have actually collected, in a hash table keyed by the "md5_128bit_value" with the original cleartext as the item value, then bingo: a lookup returns the cleartext directly.

Patrick also mentioned that we could first sort these 128-bit keys and then binary-search for the queried "md5_128bit_value". Over the full key space, though, that still takes too much space, up to about 10^38 entries.

Hmmmm... A lot of forums use MD5 to hash their users' passwords, so it would be wise to test the MD5 value of your password on those MD5 cracking websites before you hand it over to your forum, like

www.ucbbs.com
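The lookup idea can be sketched in a few lines of Python with the standard `hashlib` module. The tiny word list is a made-up stand-in for the multi-gigabyte lists those sites hold, and the function names are illustrative.

```python
import hashlib


def md5_hex(text):
    """Return the MD5 digest of a string as 32 hex characters (128 bits)."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def build_lookup(candidates):
    """Map digest -> cleartext for every candidate string we have collected."""
    return {md5_hex(word): word for word in candidates}


# Hypothetical word list; real sites index millions of common passwords.
wordlist = ["123456", "password", "letmein", "qwerty"]
table = build_lookup(wordlist)

leaked_digest = md5_hex("letmein")
recovered = table.get(leaked_digest)                        # found: the word is in the list
missing = table.get(md5_hex("a long unusual passphrase"))   # not found: returns None
```

The table only grows with the number of collected strings, not with the 2^128 possible digests, which is why common passwords are recovered instantly while unusual ones are not.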

Wednesday, March 17, 2010

NETFLIX PRIZE 1M$ gone! Sep 2009.

Machine learning pulls recommendations out of the chaos of Netflix's huge dataset!

http://www.netflixprize.com/community/viewtopic.php?id=1537

It is our great honor to announce the $1M Grand Prize winner of the Netflix Prize contest as team BellKor’s Pragmatic Chaos for their verified submission on July 26, 2009 at 18:18:28 UTC, achieving the winning RMSE of 0.8567 on the test subset. This represents a 10.06% improvement over Cinematch’s score on the test subset at the start of the contest. We congratulate the team of Bob Bell, Martin Chabbert, Michael Jahrer, Yehuda Koren, Martin Piotte, Andreas Töscher and Chris Volinsky for their superb work advancing and integrating many significant techniques to achieve this result.

The Prize was awarded in a ceremony in New York City on September 21st, 2009. We will post a video on this forum of the presentation the team delivered about their Prize algorithm. In accord with the Rules the winning team has prepared a system description consisting of three papers, which we both make public below.

Team BellKor’s Pragmatic Chaos edged out team The Ensemble with the winning submission coming just 24 minutes before the conclusion of the nearly three-year-long contest. Historically the Leaderboard has only reported team scores on the quiz subset. The Prize is awarded based on teams' test subset score. Now that the contest is closed we will be updating the Leaderboard to report team scores on both the test and quiz subsets.

To everyone who participated in the Netflix Prize: You've made this a truly remarkable contest and you've brought great innovation to the field. We applaud you for your contributions and we hope you've enjoyed the journey. The Netflix Prize contest is now closed.

We will soon be launching a new contest, Netflix Prize 2. Stay tuned for more details.

The winning team’s papers submitted to the judges can be found below. These papers build on, and require familiarity with, work published in the 2008 Progress Prize.

Y. Koren, "The BellKor Solution to the Netflix Grand Prize", (2009).

A. Töscher, M. Jahrer, R. Bell, "The BigChaos Solution to the Netflix Grand Prize", (2009).

M. Piotte, M. Chabbert, "The Pragmatic Theory solution to the Netflix Grand Prize", (2009).

Tuesday, March 16, 2010

Google interview question: Throw 2 eggs on 100 storied building

Underlying fact: if a thrown egg does not break, you can pick it up and reuse it!

Ravi and I spent some time today discussing it and came up with different solutions.

1, Binary search is optimal when you have plenty of eggs, achieving log2(n) complexity, but it is not the best approach under this constraint of only 2 eggs.

2, Linear scanning. Split the 100-floor building into sections of length x, giving about 100/x sections. Start at floor x and throw the 1st egg; if it does not break, go up another x floors. Once it breaks, move into the section just below and, starting from the bottom of that section, go up one floor at a time with the 2nd egg until it breaks.

The number of trials in the worst case is at most

f(x) = ceil(100/x) + (x - 1);

It's easy to see that this is smallest around x = 10, where the worst case is 10 + 9 = 19 trials.

That is good enough, but it is not the optimal solution for this problem!

3, Notice that the solution above is a "double linear" scan, which is what we attack in this improved version:

Instead of equal-length sections, what if we make the sections unequal? Specifically, how about decreasing the number of floors in each section as we go up? Since the inner scan (almost always) starts from the lowest floor of its section, why not "skip" more floors in the bottom sections?

Denote "outside" as the number of trials spent identifying the section and "inside" as the number of trials spent searching within that section. We want the worst case to satisfy:

"outside" + "inside" == constant

meaning that the more trials you have spent on "outside", the fewer you can afford to spend on "inside"; otherwise the worst case does not improve.

Here we go!

Assume we have:

(x) + (x-1) + (x-2) + ... + (1) >= 100

where each ( ) is a section length, so that the sections cover all 100 floors.

Solve for the smallest x with

sum_{i=1}^{x} i = x(x+1)/2 >= 100. Since x^2 is roughly 200, we could use Google calculator to compute:

sqrt(201) = 14.1774469

so the bottom section length is 14 (13 would cover only 91 floors, while 14 covers 105), and the section lengths going up are 13, 12, 11, ..., 1. Bingo! See the magic here?!

The strategy is the same as before: first find the "outside" section by throwing the 1st egg until it breaks, then dive into the section below and scan linearly upward with the 2nd egg.

E.g. when the 1st egg breaks at floor 14, we have spent 1 trial on the "outside" section, then we throw the 2nd egg starting from floor 1. The worst case here is when the answer is floor 13 or 14, and then we use up 1 + 13 = 14 trials.

This is actually the upper bound for every section in our formulation! Remember that tradeoff?

Therefore we've achieved the "egg salvation" Google brainteaser!

Thanks for the show! :D
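The decreasing-section strategy can be checked by brute force. The sketch below picks the smallest first step x with x(x+1)/2 >= floors, builds the first-egg floors (14, 27, 39, ... for 100 floors), and counts the drops needed for every possible answer. The function names are illustrative, and "critical" is taken to mean the lowest floor at which an egg breaks (floors + 1 if it never breaks).

```python
def min_first_step(floors):
    """Smallest x such that x + (x-1) + ... + 1 covers all floors."""
    x = 0
    while x * (x + 1) // 2 < floors:
        x += 1
    return x


def drop_floors(floors):
    """Floors for the first egg: each section is one floor shorter than the last."""
    step = min_first_step(floors)
    floor, plan = 0, []
    while floor < floors and step > 0:
        floor = min(floor + step, floors)
        plan.append(floor)
        step -= 1
    return plan


def trials_needed(floors, critical):
    """Drops used when eggs break at floor `critical` and every floor above it."""
    drops, safe = 0, 0
    for f in drop_floors(floors):
        drops += 1
        if f >= critical:                  # 1st egg breaks at floor f
            for g in range(safe + 1, f):   # 2nd egg scans upward inside the section
                drops += 1
                if g >= critical:
                    break
            return drops
        safe = f
    return drops                           # the egg never broke


first_step = min_first_step(100)
worst_case = max(trials_needed(100, c) for c in range(1, 102))
```

For 100 floors this gives a first step of 14 and a worst case of 14 drops, matching the "outside" + "inside" == constant argument: every section costs at most 14 trials in total.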

Saturday, March 13, 2010

play piano notes in Matlab

Quite interesting!

for n = [1,3,5,6,8,10,12,13], sine_tone(440*2^(n/12)); end

function sine_tone(freq)
% play a one-second sine wave at freq Hz
fs = 8192;              % sample rate in Hz
t = 0:1/fs:1;           % one second of sample times
y = sin(2*pi*freq*t);
soundsc(y, fs)
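For readers without MATLAB, the same arithmetic can be sketched in Python: each note is n semitones above A4 (440 Hz), so its frequency is 440 * 2^(n/12), and the tone is that sine wave sampled at fs Hz. This only computes the numbers; playing the samples would need an audio library, which is left out here. The names are illustrative.

```python
import math

A4_HZ = 440.0
SEMITONES = [1, 3, 5, 6, 8, 10, 12, 13]   # same offsets as the MATLAB loop


def note_frequency(n):
    """Frequency of the note n semitones above A4 in equal temperament."""
    return A4_HZ * 2 ** (n / 12)


def sine_samples(freq, fs=8192, seconds=1.0):
    """Samples of sin(2*pi*freq*t) for t = 0, 1/fs, ..., seconds."""
    count = int(fs * seconds) + 1
    return [math.sin(2 * math.pi * freq * k / fs) for k in range(count)]


frequencies = [note_frequency(n) for n in SEMITONES]
tone = sine_samples(frequencies[0])
```

An offset of 12 semitones doubles the frequency, so `note_frequency(12)` is 880 Hz, one octave above A4.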

Monday, March 1, 2010

Torsten Reil: Animating neurobiologist

From modeling the mayhem of equine combat in Lord of the Rings: Return of the King to animating Liberty City gun battles in Grand Theft Auto IV, Torsten Reil's achievements are all over the map these days. Software that he helped create (with NaturalMotion, the imaging company he co-founded) has revolutionized computer animation of human and animal avatars, giving rise to some of the most breathtakingly real sequences in the virtual world of video games and movies- and along the way given valuable insight into the way human beings move their bodies.

Reil was a neural researcher working on his Masters at Oxford, developing computer simulations of nervous systems based on genetic algorithms- programs that actually used natural selection to evolve their own means of locomotion. It didn't take long until he realized the commercial potential of these lifelike characters. In 2001 he capitalized on this lucrative adjunct to his research, and cofounded NaturalMotion. Since then the company has produced motion simulation programs like Euphoria and Morpheme, state of the art packages designed to drastically cut the time and expense of game development, and create animated worlds as real as the one outside your front door. Animation and special effects created with Endorphin (NaturalMotion's first animation toolkit) have lent explosive action to films such as Troy and Poseidon, and NaturalMotion's software is also being used by LucasArts in video games such as the hotly anticipated Indiana Jones.

But there are serious applications aside from the big screen and the Xbox console: NaturalMotion has also worked under a grant from the British government to study the motion of a cerebral palsy patient, in hopes of finding therapies and surgeries that dovetail with the way her nervous system is functioning.

"It might be surprising to find a biologist pushing the frontiers of computer animation. But Torsten Reil is bringing cheaper, lifelike digital characters to video games and films." (Technology Review)

Sunday, February 28, 2010

beaver engineer

I just watched National Geographic's TV documentary "Beaver Engineer". Very impressive.

Monday, February 1, 2010

AI conference ranking

AREA: Artificial Intelligence and Related Subjects

http://www3.ntu.edu.sg/home/ASSourav/crank.htm

Rank 1:

AAAI: American Association for AI National Conference

CVPR: IEEE Conf on Comp Vision and Pattern Recognition

IJCAI: Intl Joint Conf on AI

ICCV: Intl Conf on Computer Vision

ICML: Intl Conf on Machine Learning

KDD: Knowledge Discovery and Data Mining

KR: Intl Conf on Principles of KR & Reasoning

NIPS: Neural Information Processing Systems

UAI: Conference on Uncertainty in AI

AAMAS: Intl Conf on Autonomous Agents and Multi-Agent Systems (past: ICAA)

ACL: Annual Meeting of the ACL (Association of Computational Linguistics)

Rank 2:

NAACL: North American Chapter of the ACL

AID: Intl Conf on AI in Design

AI-ED: World Conference on AI in Education

CAIP: Intl Conf on Comp. Analysis of Images and Patterns

CSSAC: Cognitive Science Society Annual Conference

ECCV: European Conference on Computer Vision

EAI: European Conf on AI

EML: European Conf on Machine Learning

GECCO: Genetic and Evolutionary Computation Conference (used to be GP)

IAAI: Innovative Applications in AI

ICIP: Intl Conf on Image Processing

ICNN/IJCNN: Intl (Joint) Conference on Neural Networks

ICPR: Intl Conf on Pattern Recognition

ICDAR: International Conference on Document Analysis and Recognition

ICTAI: IEEE conference on Tools with AI

AMAI: Artificial Intelligence and Maths

DAS: International Workshop on Document Analysis Systems

WACV: IEEE Workshop on Apps of Computer Vision

COLING: International Conference on Computational Linguistics

EMNLP: Empirical Methods in Natural Language Processing

EACL: Annual Meeting of European Association Computational Linguistics

CoNLL: Conference on Natural Language Learning

DocEng: ACM Symposium on Document Engineering

IEEE/WIC International Joint Conf on Web Intelligence and Intelligent Agent Technology

ICDM: IEEE International Conference on Data Mining

Rank 3:

PRICAI: Pacific Rim Intl Conf on AI

AAI: Australian National Conf on AI

ACCV: Asian Conference on Computer Vision

AI*IA: Congress of the Italian Assoc for AI

ANNIE: Artificial Neural Networks in Engineering

ANZIIS: Australian/NZ Conf on Intelligent Inf. Systems

CAIA: Conf on AI for Applications

CAAI: Canadian Artificial Intelligence Conference

ASADM: Chicago ASA Data Mining Conf: A Hard Look at DM

EPIA: Portuguese Conference on Artificial Intelligence

FCKAML: French Conf on Know. Acquisition & Machine Learning

ICANN: International Conf on Artificial Neural Networks

ICCB: International Conference on Case-Based Reasoning

ICGA: International Conference on Genetic Algorithms

ICONIP: Intl Conf on Neural Information Processing

IEA/AIE: Intl Conf on Ind. & Eng. Apps of AI & Expert Sys

ICMS: International Conference on Multiagent Systems

ICPS: International conference on Planning Systems

IWANN: Intl Work-Conf on Art & Natural Neural Networks

PACES: Pacific Asian Conference on Expert Systems

SCAI: Scandinavian Conference on Artificial Intelligence

SPICIS: Singapore Intl Conf on Intelligent System

PAKDD: Pacific-Asia Conf on Know. Discovery & Data Mining

SMC: IEEE Intl Conf on Systems, Man and Cybernetics

PAKDDM: Practical App of Knowledge Discovery & Data Mining

WCNN: The World Congress on Neural Networks

WCES: World Congress on Expert Systems

ASC: Intl Conf on AI and Soft Computing

PACLIC: Pacific Asia Conference on Language, Information and Computation

ICCC: International Conference on Chinese Computing

ICADL: International Conference on Asian Digital Libraries

RANLP: Recent Advances in Natural Language Processing

NLPRS: Natural Language Pacific Rim Symposium

Meta-Heuristics International Conference

Rank 3:

NNSP: Neural Networks for Signal Processing

ICASSP: IEEE Intl Conf on Acoustics, Speech and SP

GCCCE: Global Chinese Conference on Computers in Education

ICAI: Intl Conf on Artificial Intelligence

AEN: IASTED Intl Conf on AI, Exp Sys & Neural Networks

WMSCI: World Multiconfs on Sys, Cybernetics & Informatics

LREC: Language Resources and Evaluation Conference

AIMSA: Artificial Intelligence: Methodology, Systems, Applications

AISC: Artificial Intelligence and Symbolic Computation

CIA: Cooperative Information Agents

International Conference on Computational Intelligence for Modelling, Control and Automation

Pattern Matching

ECAL: European Conference on Artificial Life

EKAW: Knowledge Acquisition, Modeling and Management

EMMCVPR: Energy Minimization Methods in Computer Vision and Pattern Recognition

EuroGP: European Conference on Genetic Programming

FoIKS: Foundations of Information and Knowledge Systems

IAWTIC: International Conference on Intelligent Agents, Web Technologies and Internet Commerce

ICAIL: International Conference on Artificial Intelligence and Law

SMIS: International Symposium on Methodologies for Intelligent Systems

IS&N: Intelligence and Services in Networks

JELIA: Logics in Artificial Intelligence

KI: German Conference on Artificial Intelligence

KRDB: Knowledge Representation Meets Databases

MAAMAW: Modelling Autonomous Agents in a Multi-Agent World

NC: ICSC Symposium on Neural Computation

PKDD: Principles of Data Mining and Knowledge Discovery

SBIA: Brazilian Symposium on Artificial Intelligence

Scale-Space: Scale-Space Theories in Computer Vision

XPS: Knowledge-Based Systems

I2CS: Innovative Internet Computing Systems

TARK: Theoretical Aspects of Rationality and Knowledge Meeting

MKM: International Workshop on Mathematical Knowledge Management

ACIVS: International Conference on Advanced Concepts For Intelligent Vision Systems

ATAL: Agent Theories, Architectures, and Languages

LACL: International Conference on Logical Aspects of Computational Linguistics
